This week, T-SQL Tuesday is being hosted by Jes Borland, and the theme is "Aggregate Functions."

This week, T-SQL Tuesday is being hosted by Jes Borland, and the theme is "Aggregate Functions."

When people think of aggregates, they tend to think of MAX, SUM and COUNT. And occasionally, less common functions such as AVG and STDEV. I thought I would write a quick post about a different type of aggregate: string concatenation. Even going back to my classic ASP days, one of the more common questions out in the community has been, "how do I turn a column into a comma-separated list?"

Over the years, I've used a variety of approaches to solve this problem. For this post I wanted to compare a few different methods, sticking to pure T-SQL and ignoring external solutions such as SQLCLR for brevity. I'm also going to use inline code instead of bothering to put anything into UDFs, so it's clear that function calling etc. is not interfering with the results. I'm sure there is nothing you haven't seen before, but having spotted several recent discussions where people profess things like, "a while loop is more efficient than a cursor," I thought it would be useful to take a quick glance at the performance of each of the common approaches.

The Goal

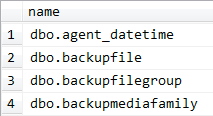

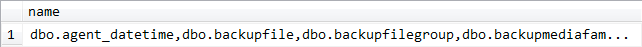

Let's make the goal kind of silly: we want all of the names from msdb.sys.objects, but rather than multiple rows in a single column, we want the names in a single tuple, separated by commas. So instead of:

We want this:

A couple of things to note: we don't need to worry about NULL values in our concatenation, so there will be no special handling for that case, though it may be necessary in your scenario. I also don't need to worry about embedded commas in the data, because I know that none of my objects are named using commas.

The Setup

I placed this code at the top of my script. I wanted a table variable to hold the object names, and some variables that would be re-used through the various string concatenation methods.

USE [msdb]; SET NOCOUNT ON; DECLARE @foo TABLE ( id INT IDENTITY(1,1) PRIMARY KEY, name NVARCHAR(257) ); INSERT @foo(name) SELECT n = OBJECT_SCHEMA_NAME([object_id]) + '.' + name FROM sys.objects ORDER BY n; DECLARE @s NVARCHAR(MAX), @n NVARCHAR(257) @loop INT = 0, @count INT = (SELECT COUNT(*) FROM @foo);

Approach #1a : An "Evil" Cursor

Very early on in my career, my approach would have been one of brute force: use a cursor. These days, when I see people compare a cursor to a while loop, I see them making a very unfair comparison; they use the default options for a cursor, which can be fairly heavyweight depending on concurrency and the rest of your workload. And I'll admit, in those early days, I often just blurted out DECLARE c CURSOR FOR... without any thoughts to options that I should be setting, such as READ_ONLY, FORWARD_ONLY, LOCAL, etc. And at the time didn't know about Itzik Ben-Gan's recommendation to use cursor variables.

I don't want this to blossom into a lesson on cursors and default settings vs. optimal settings (why GLOBAL is still the default, I'll never understand), but I will tell you that I now always use the following syntax:

DECLARE cursor_name CURSOR LOCAL STATIC FORWARD_ONLY READ_ONLY FOR ...

While it's true that FAST_FORWARD is essentially shorthand for FORWARD_ONLY and READ_ONLY, if you try to specify STATIC FAST_FORWARD, you get this error:

Conflicting cursor options STATIC and FAST_FORWARD.

Hugo Kornelis does a great job of comparing the performance of all of the cursor options in this blog post. The two most interesting things I got from this article: (1) STATIC is faster than FAST_FORWARD, and (2) the default options will always result in the slowest possible performance (the latter I already knew, which is why it always irked me when people used the default options to show how slow cursors are). In spite of his assurances that there is no difference between LOCAL and GLOBAL or whether or not you use READ_ONLY, I am probably going to keep using them for documentation and future-proofing reasons.

That all said, here is how many people would use a default cursor to achieve the desired result:

DECLARE c CURSOR FOR SELECT name FROM @foo ORDER BY name; OPEN c; FETCH NEXT FROM c INTO @n; WHILE @@FETCH_STATUS = 0 BEGIN SET @s = COALESCE(@s + ',' + @n, @n); FETCH NEXT FROM c INTO @n; END CLOSE c; DEALLOCATE c;

Approach #1b : An Optimized Cursor

The following cursor yields better performance than a default cursor, even if a few of the specified cursor options may truthfully have no impact.

DECLARE c CURSOR LOCAL STATIC FORWARD_ONLY READ_ONLY FOR SELECT name FROM @foo ORDER BY name; OPEN c; FETCH NEXT FROM c INTO @n; WHILE @@FETCH_STATUS = 0 BEGIN SET @s = COALESCE(@s + ',' + @n, @n); FETCH NEXT FROM c INTO @n; END CLOSE c; DEALLOCATE c;

I also see people suggesting that DEALLOCATE is sufficient, and that you don't need to CLOSE a cursor. For a long time, I would have agreed with them; however, it was pointed out to me last year (and I apologize, I tried to find the discussion, but failed), that you can actually observe some additional overhead if you fail to issue a CLOSE. I have plenty more to say about cursors, but I want to focus on the topic at hand, and will revisit these issues in a future blog post.

Approach #2 : A While Loop

A statement I hear a lot: "Don't use a cursor; use a while loop. They're faster." A loop is a loop; an iterative approach has the same performance limitations whether or not you explicitly use DECLARE CURSOR / FETCH. The while loop is a little tidier than the cursor, but relies on the IDENTITY column to iterate:

WHILE @loop < @count BEGIN SELECT TOP 1 @s = COALESCE(@s + ',' + name, name) FROM @foo WHERE id > @loop ORDER BY id; SET @loop += 1; END

Approach #3 : An Undocumented / Unsupported Aggregation

This is probably the approach I've used the most in my career – it's essentially a cursor disguised as a set-based operation. I believe I first learned this approach from Anith Sen over a decade ago (however, it can't be relied on in modern versions).

SELECT @s = COALESCE(@s + ',' + name, name) FROM @foo ORDER BY name;

Approach #4a : FOR XML PATH

When SQL Server 2005 was released, we were introduced to a much more powerful set of functionality around XML. One feature was the ability to convert a resultset into an XML string, and this was quickly exploited to join strings together without any XML tags at all, as follows:

;WITH x(x) AS ( SELECT name AS [data()] FROM @foo ORDER BY name FOR XML PATH('') ) SELECT @s = REPLACE(x, ' ', ',') FROM x;

Approach #4b : FOR XML PATH, TYPE

I picked up this approach from a comment by RBarryYoung on an old blog post from Adam Machanic ("Rowset string concatenation: Which method is best?"). It is similar to the above but uses value() and TYPE for extracting the string values from the XML. I just couldn't figure out how to pull the values out with the comma separator without having to hack off the last comma.

SELECT @s = ( SELECT name + ',' FROM @foo ORDER BY name FOR XML PATH(''), TYPE ).value('.[1]', 'NVARCHAR(MAX)'); SELECT @s = LEFT(@s, LEN(@s)-1);

Performance Test

I ran each of these code segments 1,000 times (script attached below). I also noted in Hugo's research that the situation can be even worse for the WHILE loop when the IDENTITY column is not the clustering key, so I tried the script again with the PRIMARY KEY on the name column of the table variable instead of the id column. Below are all of the results:

| IDENTITY = PK | name = PK | |||

|---|---|---|---|---|

| Total Duration (milliseconds) |

Average Duration (milliseconds) |

Total Duration (milliseconds) |

Average Duration (milliseconds) |

|

| 1a : Default CURSOR | 105,060 | 105.1 | 104,843 | 104.8 |

| 1b : Optimized CURSOR | 84,710 | 84.7 | 85,013 | 85.0 |

| 2 : WHILE Loop | 113,753 | 113.8 | 516,136 | 516.1 |

| 3 : Set-Based "Cursor" | 47,640 | 47.6 | 46,750 | 46.8 |

| 4a : FOR XML PATH | 7,113 | 7.1 | 6,753 | 6.8 |

| 4b : FOR XML PATH, TYPE | 2,486 | 2.5 | 1,813 | 1.8 |

Conclusion

Clearly, at least in this specific scenario, the XML methods are far superior to any iterative approach. The disadvantage of the XML methods is that, like MERGE and other new syntax constructs, they are difficult to memorize and get right without referencing existing, working code. But if your goal is performance, the up-front and maintenance cost is going to be worth it in the long run. If you are working on a one-off, then the set-based concatenation (Approach #3) is likely a good trade-off. But in very single test, the WHILE loop is the worst performer. Perhaps there is some flawed logic in how I am constructing my loop; there are many ways to do it, this one just seemed the most intuitive and, more importantly, most efficient – at least among the typical WHILE loop methods.

I hope these results are something you keep in mind the next time you need to perform string concatenation in T-SQL, and you don't have the luxury of doing it elsewhere (e.g. in the application tier or in SQLCLR).

- File Attachment: TSQLTuesday_16.sql.zip

The other advantage of #4B over #4A is that #4B will gracefully handle characters that would get encoded by XML, like less-than, greater-than, and ampersand. Method #4A will end up encoding them, so A&P would end up in the list as A&P.

–Brad

Only because aggregates frequently involve grouping by another field, which would need including with every row. In this specific example there's no grouping info, so the overhead is minimal.

Why is it passing more data? If I send the query results from the first graphic to the application, it's the same amount of data, it's just in multiple rows instead of a single row. In fact I'm not sure if the extra data in all those commas outweighs the row overhead data.

Typically the application wants the row-by-row data also (or instead), as it is often going to have to loop over the data to display it, and arguably it is easier/more intuitive to loop using a recordset-type object than splitting a comma-separated string (especially if commas can be embedded in the data). But I would guess that with JSON, Ajax etc. the comma-separated variety is becoming more useful.

Yeah, ok. I just use STUFF to get rid of the leading comma, and find that it's much nicer than applying COALESCE each time.

Doing it in the application layer means doing grouping there too, which isn't so nice. SSRS can do it okay, but it's still passing a lot more data than is preferred.

Adam, yes, I know there is CLR. I mentioned it multiple times, but I am still finding there is a LOT of resistance to it out in the wild.

Rob, setting @s to NULL initially (notice it's in the DECLARE but no value is assigned) means that…

SET @s = COALESCE(@s + ',' + name, name)

…will, on the first row, become simply <name>. On subsequent rows, it will append a comma before appending the next name. I did take care to mention that in this case I do not need to worry about NULLs in the data, so there is no concern that the value will reset. If I simply set @s to the empty string, then the first value would have a leading comma… not overly messy, but what I have already works for this scenario.True about the ORDER BY, it's kind of like the clustered index, it

"usually" just works. Notice that I didn't make any disclaimer that the

order by was guaranteed, nor did I imply that the order of the

comma-separated string was important. It's just one additional reason

that 4b is the best (at least non-CLR) solution. Better yet is likely to build the string in the application layer, but we know there is some resistance to that as well.

(and, just for the record – I never use it, because you can get unexpected results. 4b is definitely the way to go, because FOR XML supports the ORDER BY clause – none of the others do.)

Watch out for your COALESCE calls there, Aaron.

You have: @s = COALECSE(@s + ',' + name, name)

For (@s + ',' + name) to return NULL, you'd need either @s or name to be NULL.

Presumably, you'd never want @s to be NULL if that's your running total. If you're thinking about the start, then you could set it to the empty string before you run your query.

On the other hand, if name is NULL, then you don't want to set @s to be name – that would reset your concatenated string.

So… perhaps you mean:

@s = @s + ',' + COALESCE(name,'<NULL>')

or

@s = @s + COALESCE(',' + name,")

Or, if your name column is constrained to NOT NULL, simply:

@s = @s + ',' + name

I've seen that method described as "The COALESCE method for string concatenation", but unfortunately it's not contributing anything to the process. I'd rather just use SET @s = " after declaring it. 😉

Rob

There is one more, SQLCLR 🙂 … I don't know how it compares but I suspect FOR XML is faster for smaller strings and SQLCLR for larger ones.

I wouldn't have guessed that 4b would be so much better than 4a. I use 4a type queries all the time in conjunction with STUFF command, taking care of the leading/trailing ',' as well.

SELECT @s = STUFF(( SELECT ',' + name FROM @foo ORDER BY name FOR XML PATH ("), TYPE).value('.[1]', 'NVARCHAR(MAX)'), 1, 1, ")

Looks good, thanks Jason.

On "Approach #4b", you can move the comma to the front of the name column and then use STUFF to remove the comma.

Like this:

SELECT @s=STUFF((SELECT ','+name

FROM @foo

ORDER BY name

FOR XML PATH(")

,TYPE).value('.[1]', 'NVARCHAR(MAX)'),1,1,") ;