This month's installment of T-SQL Tuesday is hosted by Pat Wright. Pat says: "So the topic I have chosen for this month is Automation! It can be Automation with T-SQL or with Powershell or a mix of both. Give us your best tips/tricks and ideas for making our lives easier through Automation."

This month's installment of T-SQL Tuesday is hosted by Pat Wright. Pat says: "So the topic I have chosen for this month is Automation! It can be Automation with T-SQL or with Powershell or a mix of both. Give us your best tips/tricks and ideas for making our lives easier through Automation."

In a recent project, we've had a need to run concurrent workloads on as many as 100 instances of SQL Server in a test environment. A goal, obviously, is to accomplish this without having to go and manually run the load tests on each of the 100 servers. Another goal, since this is for the purposes of measuring overhead (including network load), is to not pollute the tests with thousands of RPC and batch calls going over the network. This means trying very hard to keep as much of the work "local" as possible.

Now, we could set up SQL Server Agent jobs on each server that run the local workloads. That would eliminate the impact of network overhead that would be caused by running the loads remotely, but one of the goals is to minimize not only network overhead but also the overhead on the machines themselves – Agent would add overhead just by running the jobs, never mind adding all that history and cleanup for msdb. We wanted the impact on the local machine, compared to sitting idle, to represent only the stress caused by the commands themselves, to isolate that from the overhead caused by how we chose to run the commands.

So, we turned to PowerShell remoting, which allows you to run PowerShell commands from a different server just as if you were running locally.

Getting the Environment Ready

As I described earlier, we've had several occasions where we need to run workloads against 100 instances of SQL Server. We build these VMs using PowerShell, and to ease scripting and other automation around them, give each VM a name in a numerical sequence: VM-SQL-001, VM-SQL-002, …, VM-SQL-099, VM-SQL-100. Building out the VMs using a template, with a default SQL Server instance already installed, is relatively straightforward in Hyper-V; the only tricky part is that we had to write a script to change @@SERVERNAME, using sp_dropserver/sp_addserver, on all of the SQL Server instances (they all have unique Windows names, but SQL Server itself starts with the server name of the original template).

After building the 100 VMs with PowerShell, coming up with a .sql script that would run a suitable workload, and then using PowerShell to blast those .sql files to all 100 VMs (we could have pulled a single script over the network at runtime, but that would add overhead), we were ready to go. But first, in order to use remoting, you have to do the following things:

Configure WinRM

Luckily, we are standardized on Windows Server 2008 R2, so we did not have to install WinRM. If you are on Windows XP or Vista, or Windows Server 2003, 2003 R2, or 2008, you will need to install WinRM before proceeding:

The install is simple, though you'll likely need to reboot. Configuring the service to start automatically is part of the next step.

Enable remoting

In PowerShell, remoting is not enabled by default. In order to turn WinRM on, keep it on, and set up an automatic firewall extension, you need to run the following command from a PowerShell prompt:

Enable-PSRemoting;Set TrustedHosts

So that other servers can connect and run commands, you need to "let them in" … this works kind of like a firewall exception. You can of course be more restrictive than using * (which lets all systems in) but this will depend on the existing security in your environment.

Set-Item WSMan:\localhost\Client\TrustedHosts * Restart-Service WinRM;

For the virtual machines we created, this step had already been performed in the VM that served as the Hyper-V template, so the process did not need to be repeated 100 times. If you need to monitor servers that already exist, you'll need to enable remoting and set TrustedHosts on each of them. Thankfully, you'll only ever have to do this once; from then on, you can accomplish just about anything using PowerShell remotely.

The Script(s) to Rule Them All

Once the environment was configured, we would need a total of three scripts – and one command line call – to run the workload against anywhere from 1 to 100 SQL Server instances. The three scripts are:

- A .sql script with the actual commands to run; actually, 100 copies of this, located in C:\scripts\ on each VM. Make sure that all T-SQL commands have proper statement terminators (;).

- A .ps1 script that will be run remotely and load each local .sql script to execute. Again, this needs to be located in C:\scripts\ or a similar local folder on each VM.*

- A single .psm1 script on the local machine that will load as a module and start the sessions / jobs to fire each .ps1 script on each VM in turn.

The .sql script has a whole bunch of commands to generate a variety of activity on the SQL Server instances on the remote VMs – from blocks and deadlocks, to significant durations, to compiles and recompiles. I'm not going to show the script here because that's not the important part.

On its own, the script is only capable of generating so much load – however, when you can run multiple copies of the script in parallel, you can really make it look like an interesting and real-world workload. We accomplished this by establishing a session on each remote VM, then creating a separate job in each session, for each "thread" that we wanted to run the script. This is where the coolness of automation comes in.

The .ps1 script

This script (RunWorkLoads.ps1) accepts two arguments: $cycles and $delay. $cycles represents the number of times we want to run the workload. If I wanted to run the workload for a specific amount of time, rather than provide additional timing parameters, I was content with experimenting with the workload script itself and the number of cycles in order to run for at least that duration. $delay is the number of milliseconds I wanted to wait in between running the command… this provides the ability to tailor the activity against the servers according to realistic application behavior.

param([int]$cycles, [int]$delay) function RunWorkLoads { param ( [int]$cycles, [int]$delay ) $query = Get-Content C:\scripts\MyWorkload.sql; $query = [string]::join(" ", $query); for ($i = 1; $i -le $cycles; $i++) { Start-Sleep -Milliseconds $delay; Invoke-Sqlcmd -Query $query -ServerInstance localhost -Database AdventureWorks; } } LoadSQLSnapin.ps1 RunWorkLoads -cycles $cycles -delay $delay;

The .psm1 script

This module (RunWorkLoadsOnVMs) loads with the PowerShell command prompt (or with the ISE), so that we can just call the function within the module without having to point PowerShell at a folder. This is all about reducing our work, right? The tricky part is getting the path information right. As mentioned above, the .sql and .ps1 scripts need to be on the target machines (and again, I didn't fight too hard to try to make this work without distributing the files – though I'm sure a solution exists). Coming back to the automation argument, how easy is it to copy these scripts over to the target machines? With the naming scheme we chose (VM-SQL-001 -> VM-SQL-100), it is quite easy:

for ($i = 1; $i -le 1; $i++) { $vm = "VM-SQL-"; $x = "000" + ($i + 1).ToString(); $vm += $x.Substring($x.Length - 3, 3); Copy-Item C:\Scripts\RunWorkLoads.ps1 "\\$vm\C$\Scripts\"; }

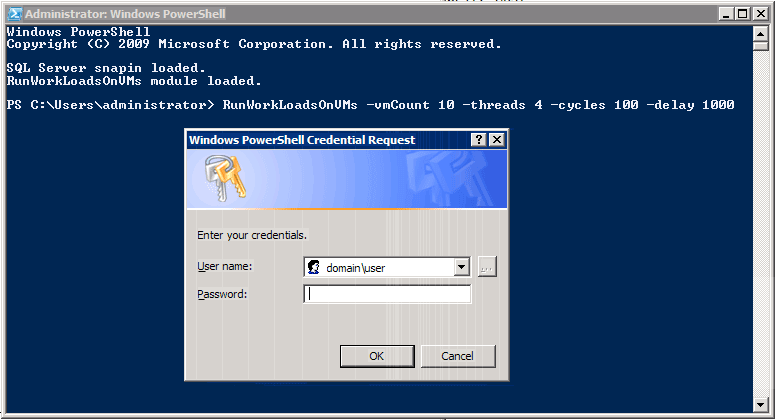

One other tricky part you may come across is the need to use CredSSP to pass the credentials of a domain user with the rights to run commands against the remote servers. In our case, this meant that when starting the command we would need to enter the password for the domain user once (but, hey, not 100 times – PowerShell++).

The module takes these four parameters: $vmCount (the number of VMs you want to run load tests against), $threads (the number of threads you want on each machine), $cycles and $delay (both described above). It uses multi-dimensional arrays to keep track of the job and session for each thread on each machine. Note that I would normally use much more descriptive variable names, but was trying to make sure the script fit on this page horizontally. I am thankful that I didn't have to learn about these session and job nuances the hard way; this was actually a substantial amount of work on the part of Greg Gonzalez (twitter):

function RunWorkLoadsOnVMs { param ( [int]$vmCount = 1, [int]$threads = 1, [int]$cycles = 150, [int]$delay = 1000 ) [int]$vmIdMax = ($vmCount - 1); [int]$SIdMax = ($threads - 1); $S = New-Object 'object[,]' $vmCount, $threads; $J = New-Object 'object[,]' $vmCount, $threads; $C = Get-Credential -credential domain\user; # will raise cred prompt $P = "C:\Scripts\RunWorkLoads.ps1"; for ([int]$vmId = 0; $vmId -le $vmIdMax; $vmId++) { $vm = "VM-SQL-"; $x = "000" + ($vmId + 1).ToString(); $vm += $x.Substring($x.Length - 3, 3); for ([int]$SId = 0; $SId -le $SIdMax; $SId++) { Write-Host "Starting job $SId on $vm..."; $S[$vmId, $SId] = New-PSSession -ComputerName ` $vm -Name "S$vmId-$sessId" ` -Credential $C -Authentication CredSSP; if ($S[$vmId, $SId] -ne $null) { $jn = "J$vmId-$SId"; $J[$vmId, $SId] = Invoke-Command -Session $S[$vmId, $SId] ` -FilePath $P -AsJob -JobName $jn -ArgumentList $cycles, $delay; } } } Write-Host "Waiting for jobs to complete..."; Get-Job | Wait-Job; for ([int]$vmId = 0; $vmId -le $vmIdMax; $vmId++) { for ([int]$SId = 0; $SId -le $SIdMax; $SId++) { if ($J[$vmId, $SId].State -eq "Failed" ) { Get-Job -Name $J[$vmId, $SId].Name; } } } $ErrorActionPreference = "Continue"; Write-Host "Cleaning up sessions..."; Get-PSSession | Remove-PSSession; Write-Host "Cleaning up jobs..."; Get-Job | Remove-Job; } Write-Host "RunWorkLoadsOnVMs module loaded.";

And here is a sample call with the resulting credential prompt:

A word of caution: always let the script finish and perform its own cleanup. If you hit Ctrl+C while these jobs are running, you will have to go back and clean up the jobs and sessions yourself. PowerShell may make this a bit easier than it could be, but it's still going to be a pain.

Conclusion

Are there 3rd party tools to allow for some of this functonality? Sure. There are all kinds of stress testing tools available (even several free ones), and we could have chosen one of dozens of existing methods to accomplish our goals. But we did not come across any that provided all of the flexibility we needed to facilitate the type of testing we wanted – never mind in a single command line.

For load testing in a simulated environment, our naming scheme made it very easy to scale my tests to any number of the 100 VMs. If you have no control over the server names, there wouldn't be much you'd have to change – instead of looping through a simple counter, you'd have to pull the list of server names from a source somewhere, such as a text file.

Many thanks again to Greg Gonzalez for doing much of the legwork and pointing me in the right direction many times throughout this project.