TL;DR: Windows Clustering no longer requires identical hardware.

Because you can now easily create Windows clusters with different metal, I hear more and more about environments where nodes in a cluster are vastly different. Whether this is because they can only deck out one server at a time, or because they need to mix older and newer hardware, it is becoming more and more commonplace for shops to set up this High Availability (HA) benefit even with dissimilar hardware. I am no longer shocked when I see Active/Active multi-instance cluster scenarios where one node has 8GB or 16GB of RAM, and the other node has 32GB. Typically in these environments, their SQL Server instances "favor" one node or the other (the important / resource-hungry instances get the "better" node, and the less important / less demanding instances still run on the "worse" node). This is under ideal conditions, when both nodes are up.

But what if one node fails?

After all, why have the cluster configured this way at all, if the instances will crush the server when they both end up on the same node? That sounds like the opposite of HA to me. What people tend to do is *react* to such a failover, and go in and manually reconfigure one or both instances to make more fair usage of the resources of the node they failed to (by going in and reconfiguring max server memory, processor affinity, etc.). So there are two important questions in the event of a failover: (a) are both instances on the same node? and (b) are they both on the "worse" node? Obviously they need to share resources if the answer to question (a) is yes, but if the answer to question (b) is also yes, then it is a much more precarious balance, because the server will have trouble handling the more demanding apps in addition to its typical load.

"That sounds cumbersome." A.K.A.: "I'm lazy."

Yes, you should question the manual approach. And no, I don't really mean you're lazy if you do. I have seen the following question several times now, relating to this scenario: "How can I automate this decision-making process? I don't want to get up at 3 AM when there is a failover, and have to manually figure out which node we're on, and how these instances should be configured on that node." In addition, they don't want to have to manually reconfigure everything one more time, once the other node is up again and they are safe to fail one of the instances back over to its preferred node.

A simple example

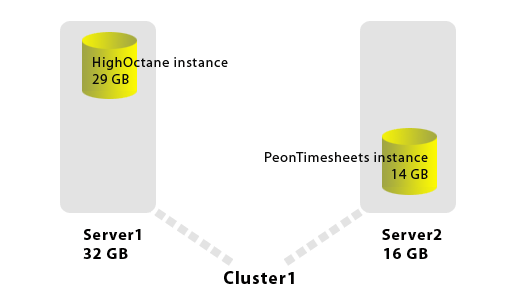

So let's say you have the scenario I described above: one cluster (Cluster1) with two physical nodes. Server1 has 32 GB of RAM, and an instance named "HighOctane." Server2 has 16 GB of RAM, and an instance named "PeonTimesheets." The HighOctane instance is set to fail over to Server2, and the PeonTimesheets instance is set to fail over to Server1. In the normal case, we want to give the HighOctane instance 29 GB of RAM (leaving 3 GB for the OS); likewise, the PeonTimesheets instance gets 14 GB of RAM (leaving 2 GB for the OS):

Now, if the PeonTimesheets instance fails over to the "better" node (Server1), we want to make some minor adjustments to the memory settings for each instance. We will leave the OS at 3 GB of RAM, but we want to scale back the HighOctane instance to 21 GB, and give the PeonTimesheets instance 8 GB:

The other more precarious scenario is when the HighOctane instance fails over to the "worse" node (Server2) and is sharing even less resources on that instance. In this case we will leave 2 GB of RAM to the OS, give the HighOctane instance 10 GB, and PeonTimesheets will have 4 GB:

These are just arbitrary numbers, of course. Only you can decide how to best balance limited resources for your applications.

So now we know the rules, but how are we any better off than when we were figuring this out manually at 3 AM?

Using a startup procedure

One idea I had to solve this problem is to use sp_configure for each instance in a startup procedure. My thinking was, from an instance standpoint, I can figure out which node I'm on, and I can figure out which node the other instance is on, so why can't I call sp_configure manually on each instance, once I know where I am and who I'm sharing the node with? I can, of course. And since both nodes won't run through their startup at the same time (since only one node will typically fail over, if things are running as they should), this startup procedure can manually reach out to the other instance and configure it at the same time.

Assuming you have linked servers set up pointing at each instance via the cluster, you can create this procedure on each node (assuming each node has a linked server called "OtherClusterInstance," each of which points to the other "stacked" instance):

CREATE PROCEDURE dbo.OptimizeInstanceMemory AS BEGIN SET NOCOUNT ON; DECLARE @GoodNode NVARCHAR(255), @BadNode NVARCHAR(255), @ThisNode NVARCHAR(255), @OtherNode NVARCHAR(255), @ThisInstance NVARCHAR(255), @command NVARCHAR(MAX), @run NVARCHAR(MAX), @ThisInstanceLoneMemory VARCHAR(5), @OtherInstanceLoneMemory VARCHAR(5), @ThisInstanceSharedMemoryBadNode VARCHAR(5), @OtherInstanceSharedMemoryBadNode VARCHAR(5), @ThisInstanceSharedMemoryGoodNode VARCHAR(5), @OtherInstanceSharedMemoryGoodNode VARCHAR(5); SELECT @GoodNode = N'Server1', @BadNode = N'Server2', @ThisNode = CONVERT(SYSNAME, SERVERPROPERTY('ComputerNamePhysicalNetBIOS')), @ThisInstance = CONVERT(SYSNAME, SERVERPROPERTY('InstanceName')), @ThisInstanceLoneMemory = CASE @ThisInstance WHEN 'HighOctane' THEN '29000' WHEN 'PeonTimesheets' THEN '14000' END, @OtherInstanceLoneMemory = CASE @ThisInstance WHEN 'HighOctane' THEN '14000' WHEN 'PeonTimesheets' THEN '29000' END, @ThisInstanceSharedMemoryBadNode = CASE @ThisInstance WHEN 'HighOctane' THEN '10000' WHEN 'PeonTimesheets' THEN '4000' END, @OtherInstanceSharedMemoryBadNode = CASE @ThisInstance WHEN 'HighOctane' THEN '4000' WHEN 'PeonTimesheets' THEN '10000' END, @ThisInstanceSharedMemoryGoodNode = CASE @ThisInstance WHEN 'HighOctane' THEN '21000' WHEN 'PeonTimesheets' THEN '8000' END, @OtherInstanceSharedMemoryGoodNode = CASE @ThisInstance WHEN 'HighOctane' THEN '8000' WHEN 'PeonTimesheets' THEN '21000' END, @Command = 'USE [master]; EXEC sp_configure ''show advanced options'', 1; RECONFIGURE WITH OVERRIDE; EXEC sp_configure ''max server memory (MB)'', $; RECONFIGURE WITH OVERRIDE; EXEC sp_configure ''show advanced options'', 0; RECONFIGURE WITH OVERRIDE;'; -- find the current node on the other instance: EXEC OtherClusterInstance.[master].dbo.sp_executeSQL N'SELECT @node = CONVERT(SYSNAME, SERVERPROPERTY(''ComputerNamePhysicalNetBIOS''))', N'@node SYSNAME OUTPUT', @OtherNode OUTPUT; IF @ThisNode = @OtherNode BEGIN -- both on same server! IF @ThisNode = @BadNode BEGIN -- both on bad node! SET @run = REPLACE(@command, '$', @ThisInstanceSharedMemoryBadNode); EXEC [master].dbo.sp_executeSQL @run; SET @run = REPLACE(@command, '$', @OtherInstanceSharedMemoryBadNode); EXEC OtherClusterInstance.[master].dbo.sp_executeSQL @run; END ELSE BEGIN -- both on good node! SET @run = REPLACE(@command, '$', @ThisInstanceSharedMemoryGoodNode); EXEC [master].dbo.sp_executeSQL @run; SET @run = REPLACE(@command, '$', @OtherInstanceSharedMemoryGoodNode); EXEC OtherClusterInstance.[master].dbo.sp_executeSQL @run; END END ELSE BEGIN -- each on their own node! -- might want to verify that the right instance -- is on the right server; I'll leave that as an -- exercise to the reader SET @run = REPLACE(@command, '$', @ThisInstanceLoneMemory); EXEC [master].dbo.sp_executeSQL @run; SET @run = REPLACE(@command, '$', @OtherInstanceLoneMemory); EXEC OtherClusterInstance.[master].dbo.sp_executeSQL @run; END END GO

Then, mark it as a startup procedure:

EXEC [master].dbo.sp_procoption 'dbo.OptimizeInstanceMemory', 'startup', 'true';

Other options

Of course you can also adjust other configuration options using the same technique. You may want to restrict processor affinity or degree of parallelism at the server level, both of which you can do the same way using sp_configure. You may be able to do these things from external tools like WSRM, but I don't know how complex you can get with rules such as "when both instances are on server x, do y." Maybe some WSRM expert can chime in about this?

As a side note, you should be made aware when a failover like this has happened; a lot of people let their clusters fail over and back silently, but in this kind of scenario, you want to be on heightened alert especially when both instances are servicing applications from the weaker node. You can set up an e-mail alert in the procedure above that sends an e-mail whenever the startup procedure finds that both instances are sharing the same physical node. You could also use tools like SQL Sentry's Power Suite, which gives you this capability out of the box, and a whole slew of other monitoring, troubleshooting and alerting features.

Another thing you may want to do is combine the above dynamic memory restrictions with other tools, such as the Resource Governor introduced in SQL Server 2008. Once your 29 GB instance has been reduced to 10 GB, you may want to additionally restrict how much resources individual users can use, so they don't overwhelm the now underpowered database engine. You can read more about this in the Resource Governor whitepaper I wrote with Boris Baryshnikov.

Disclaimers

Sadly, I do not currently have a multi-instance cluster to test with, so I am not sure how accurate everything above is; basically, it is just a proof of concept at this point. Hopefully if this scenario applies to you, you can try this out in your test environment and let me know whether it worked and/or any changes that were required.

To avoid over-complicating the code, I did not add any error handling (most importantly, sp_testlinkedserver). You'll also notice my comment where I leave it up to you to make sure that if each instance is on its own node it is actually on the node you expect (I assume that if the temporarily offline node becomes available, the next manual failover is handled correctly). You may need another pair of memory configuration values to handle that case (or send an alert so that someone can manually fail each instance back to their proper node).

Note also that you will have to keep in mind that your startup procedure can be bypassed. Someone could turn the option back off for this procedure using sp_procoption; also, all startup procedures can be bypassed via sp_configure (via option 'scan for startup procs'), through trace flag 4022, or starting SQL Server in minimal config (-f). For more information, see sp_procoption in Books Online).

P.S. thanks to Jonathan Kehayias and Andy Kelly, both of whom provided the prodding necessary to motivate me to throw this blog post together.

Sure, instead of marking it as a startup procedure, you could set it up as a windows scheduled task – a bat file or command-line C# app that simply calls the stored procedure directly. However you'd need to make task scheduler depend on the sql server service for the cluster, otherwise it might fire before the service has started.

Regarding a Managing Active/Active cluster failovers with different hardware

startup procedure

Its useful.

My question is This startup procedure is on instance level,Is there any way to make it as windows server level.

Bcoz I want to setup the memory during a windows server restart only not normal sqlserver instance restart

Regarding a Managing Active/Active cluster failovers with different hardware

startup procedure

Its useful.

My question is This startup procedure is on instance level,Is there any way to make it as windows server level.

Bcoz I want to setup the memory during a windows server restart only not normal sqlserver instance restart

Hey Aaron – I'm trying this sort of thing right now. I've got two machines (DL580 and 380) that will become available to me for such a cluster, in production, in the next month or so. I would like to use them together, so I am testing this sort of technique on a test cluster now. Also have this up at ServerFault

http://serverfault.com/questions/78776/experience-with-asymmetrical-non-identical-hardware-sql-server-2005-win-2003

Thanks for the response. I agree, my line of thinking right now is to add two nodes to the windows cluster, and not even install SQL Server on them. They would purely be there to hold the windows cluster up should we lose two of the three physical nodes running SQL. Hoping to give it a try in the new data center before we cut over to it, but running out of time in a hurry.

I'm personally a little wary of trusting virtualizing SQL Server, even not in a cluster, though it is gaining acceptance amongst some of my peers. I'm not quite ready to trust it like they are. 🙂

As for the C# service, that is probably a more reliable and flexible way to do it. This was more of a proof of concept to show that it *could* be automated from T-SQL if that were your only/easiest option.

Aaron,

I am planning on implementing a very similar solution for a cluster in a new data center we're brining up next month. I go back and forth between this and a fairly simple C# service running on each node monitoring for resource changes and appropriately handling configuration changes as well as notifications. One thing I'm considering is mixing both physical nodes and virtual nodes into my cluster. We happen to have three high octane nodes on a majority node cluster. In a worst case scenario we could configure all instances to run on one node, but the cluster goes down if we lose two nodes. So I'm thinking of adding two virtual machines as nodes to the cluster that could not be owners of any major SQL instances, but would keep the cluster up even if we lost two out of three major nodes. Thoughts, comments, concerns?

Thanks,

Jeff Banschbach