I've talked about illogical errors before. In several answers on Database Administrators (one, two, three), I show how you can use a

I've talked about illogical errors before. In several answers on Database Administrators (one, two, three), I show how you can use a CASE expression or TRY_CONVERT to work around an error where a non-numeric value, that should have been filtered out by a join or other clause, still leads to a conversion error. Erland Sommarskog raised a Connect item over a decade ago, still unaddressed, called "SQL Server should not raise illogical errors."

Recently we had a scenario where a query was failing on one server but not another. But this was slightly different; there were no numerics involved. Imagine this scenario: a source table has a column that is varchar(20). A query creates a table variable with a column that is varchar(10), and inserts rows from the source table, with a filter in place that only exposes values that are 10 characters or less.

In a lot of cases, of course, this scenario is perfectly fine, and everything works as expected.

CREATE TABLE dbo.src(i int, a varchar(20)); INSERT dbo.src VALUES(1,'aaaaa'),(2,'bbbbbbbbbbbbbbb'); DECLARE @t table(a varchar(10)); INSERT @t SELECT a FROM dbo.src WHERE i = 1; DROP TABLE dbo.src;

If you change that to WHERE i = 2;, though, you would get this* error message:

String or binary data would be truncated in table 'tempdb.dbo.#A60B3C91', column 'a'. Truncated value: 'bbbbbbbbbb'.

No surprises here; this is how it should work.

A more complex example

When the schema and the queries get more complicated, the plan becomes important. Here's a slightly more complicated scenario, but kept as simple as possible, to demonstrate the problem:

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int NOT NULL); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4), (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT t1.s FROM dbo.t1 INNER JOIN dbo.t2 ON t1.id = t2.id; GO DROP TABLE dbo.t1, dbo.t2;

This yields an error similar to the one we saw earlier:

String or binary data would be truncated in table 'tempdb.dbo.#AC65D70E', column 'dest'. Truncated value: 'len'.

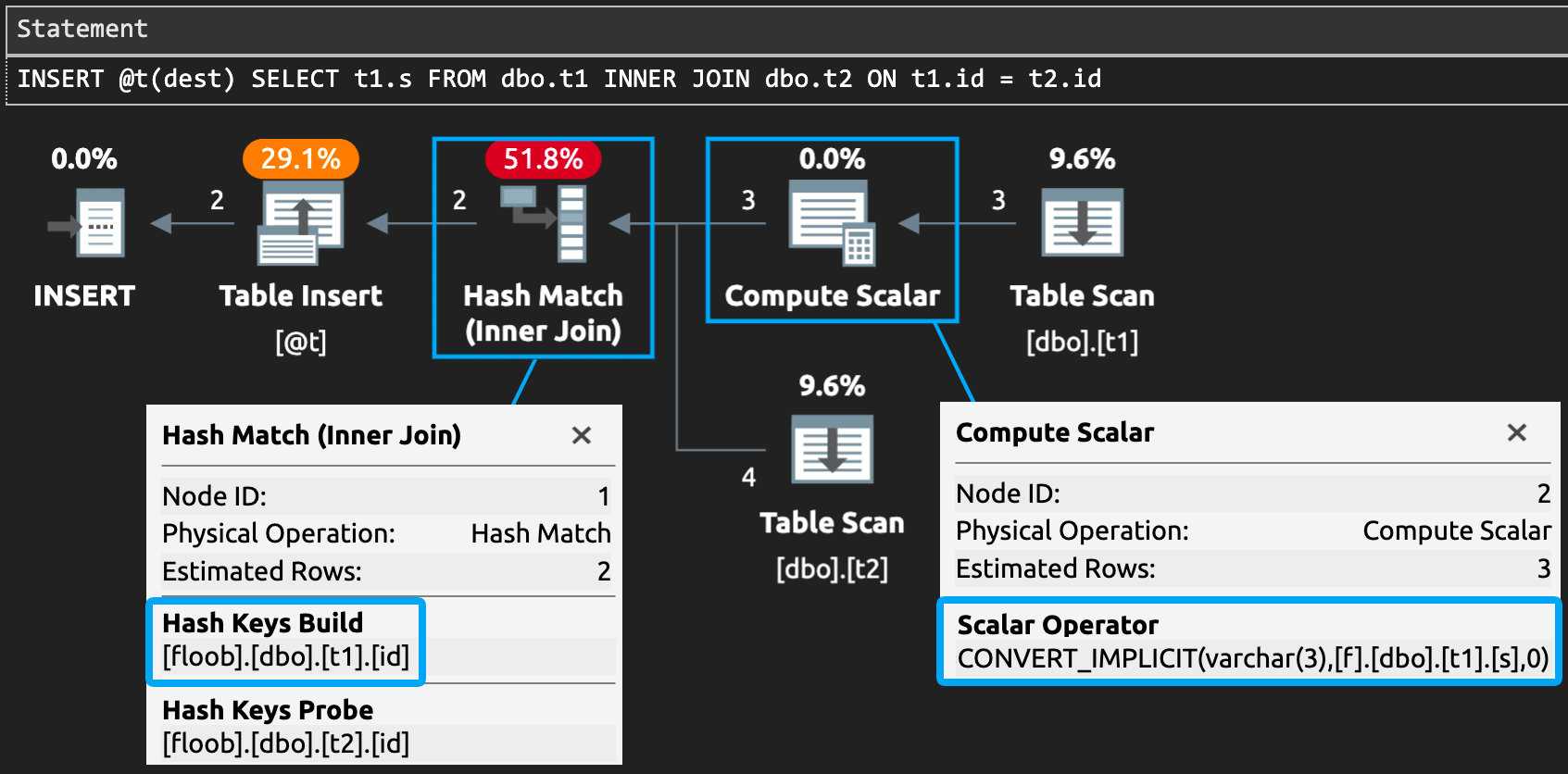

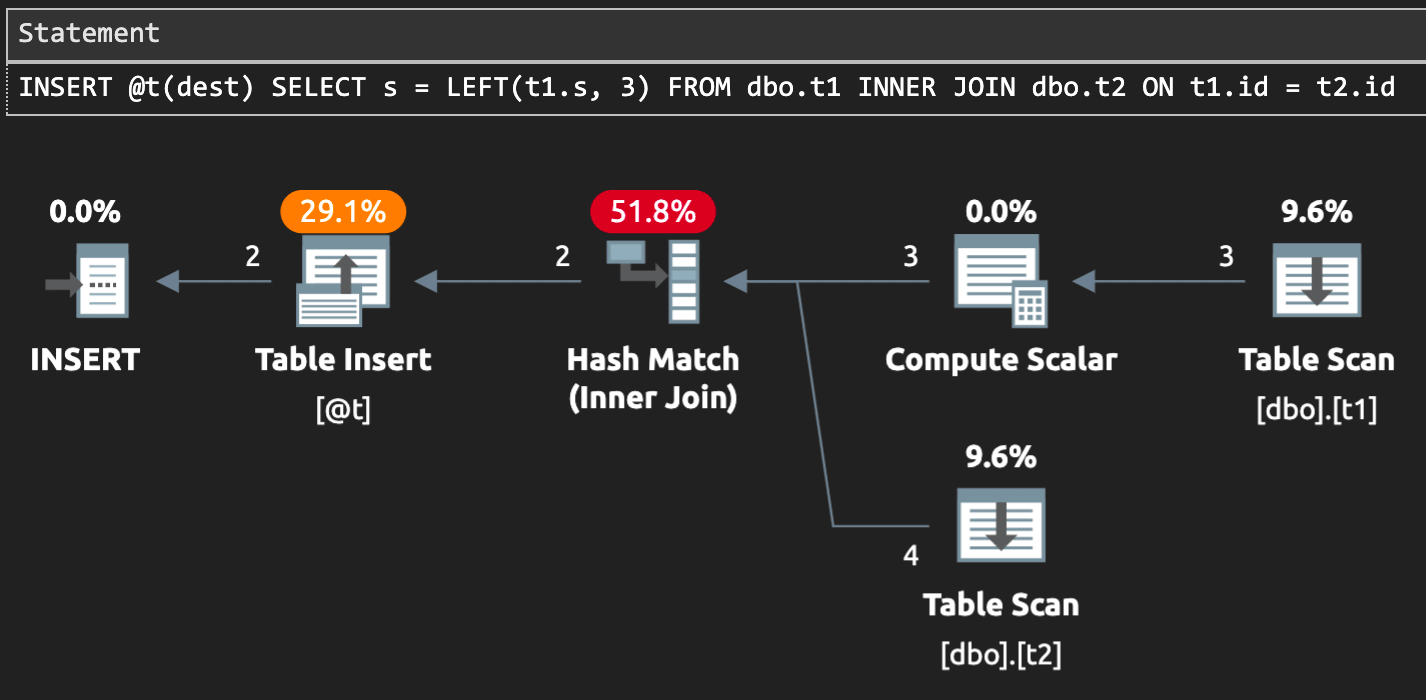

While we should have only retrieved rows with values that fit in the destination column (id is 1 or 3, since those are the only two rows that match the join criteria), the error message indicates that the row where id is 2 was also returned, even though we know it couldn't possibly have been. Let's look at the estimated plan (with apologies for that terminology to Grant, Hugo, and Erin):

Plan derived from "Explain" in Azure Data Studio

Plan derived from "Explain" in Azure Data Studio

You can see the CONVERT_IMPLICIT there, which you might think should have made this error impossible. You can also see that the hash match has only two rows coming out of it, but something missing from diagrams is how many rows go into an operator, and in this case the operator's property sheet reveals that the source table is used to materialize the build side. This is where the error occurs, because the implicit conversion has to be evaluated.

I can show a few ways that, without changing the query, we can make the error disappear (or reappear).

Different Data

With just one change to the source data:

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int NOT NULL); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4);--, (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT t1.s FROM dbo.t1 INNER JOIN dbo.t2 ON t1.id = t2.id; GO DROP TABLE dbo.t1, dbo.t2;

This time, the hash match yields no error

This time, the hash match yields no error

We still have a hash match, but notice that the build side and probe side have swapped, a side effect of slightly different data. Because the hash table no longer has to materialize the result of the implicit conversion, no error occurs.

Different Schema

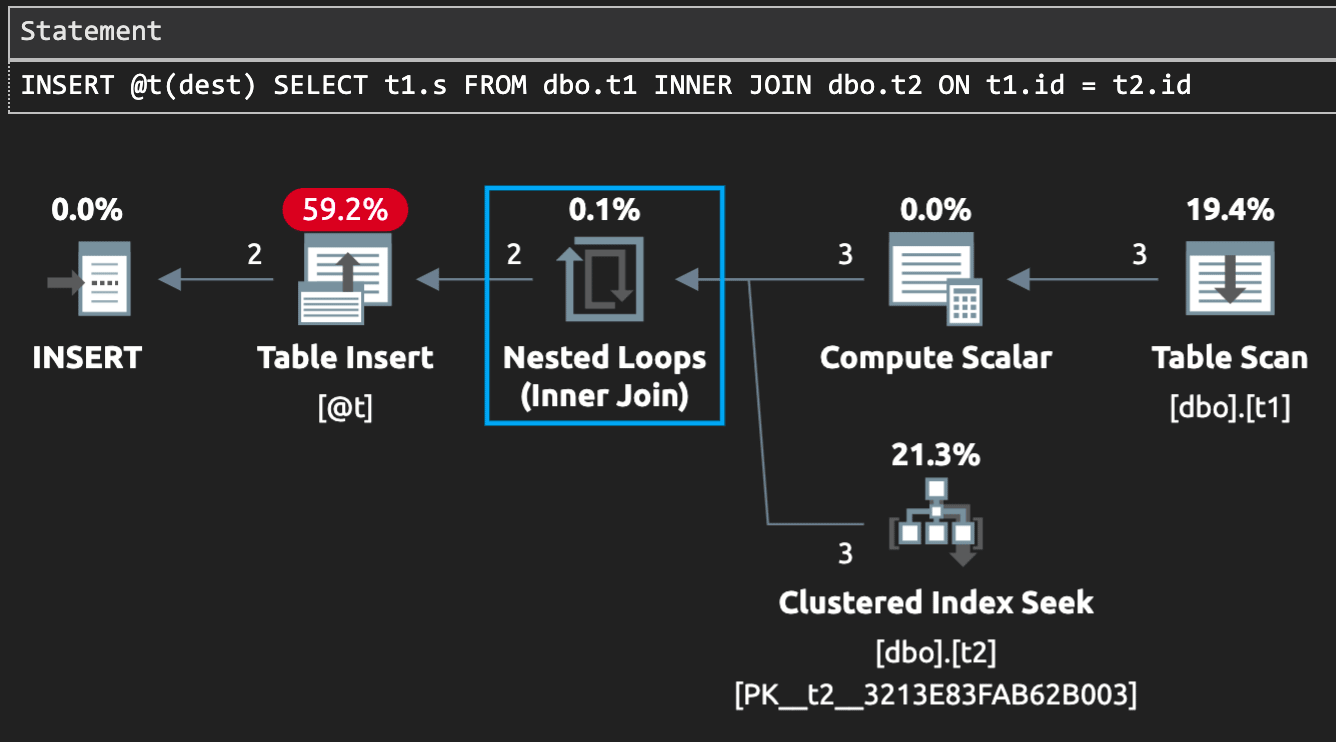

Another "solution" is to add a clustered index to dbo.t2 (and revert to inserting the original 4 rows):

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int PRIMARY KEY CLUSTERED); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4), (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT t1.s FROM dbo.t1 INNER JOIN dbo.t2 ON t1.id = t2.id; GO DROP TABLE dbo.t1, dbo.t2;

Here is how the plan changes:

Now we get a nested loops join and no error

Now we get a nested loops join and no error

The CONVERT_IMPLICIT is still there on the compute scalar, but the evaluation isn't forced before the bad row is eliminated, because the nested loops join doesn't have to materialize anything.

Different Query

You could also change the query; for example, you could apply functions like LEFT, SUBSTRING, or an explicit CONVERT (or CAST) to the column:

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int NOT NULL); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4), (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT s = LEFT(t1.s, 3) -- or SUBSTRING(t1.s, 1, 3) -- or CONVERT(varchar(3), t1.s) FROM dbo.t1 -- or CAST(t1.s AS varchar(3)) INNER JOIN dbo.t2 ON t1.id = t2.id; GO DROP TABLE dbo.t1, dbo.t2;

The underlying transformation here is implemented as a SUBSTRING, and this gives the optimizer enough information to know that no value could be longer than 3 characters:

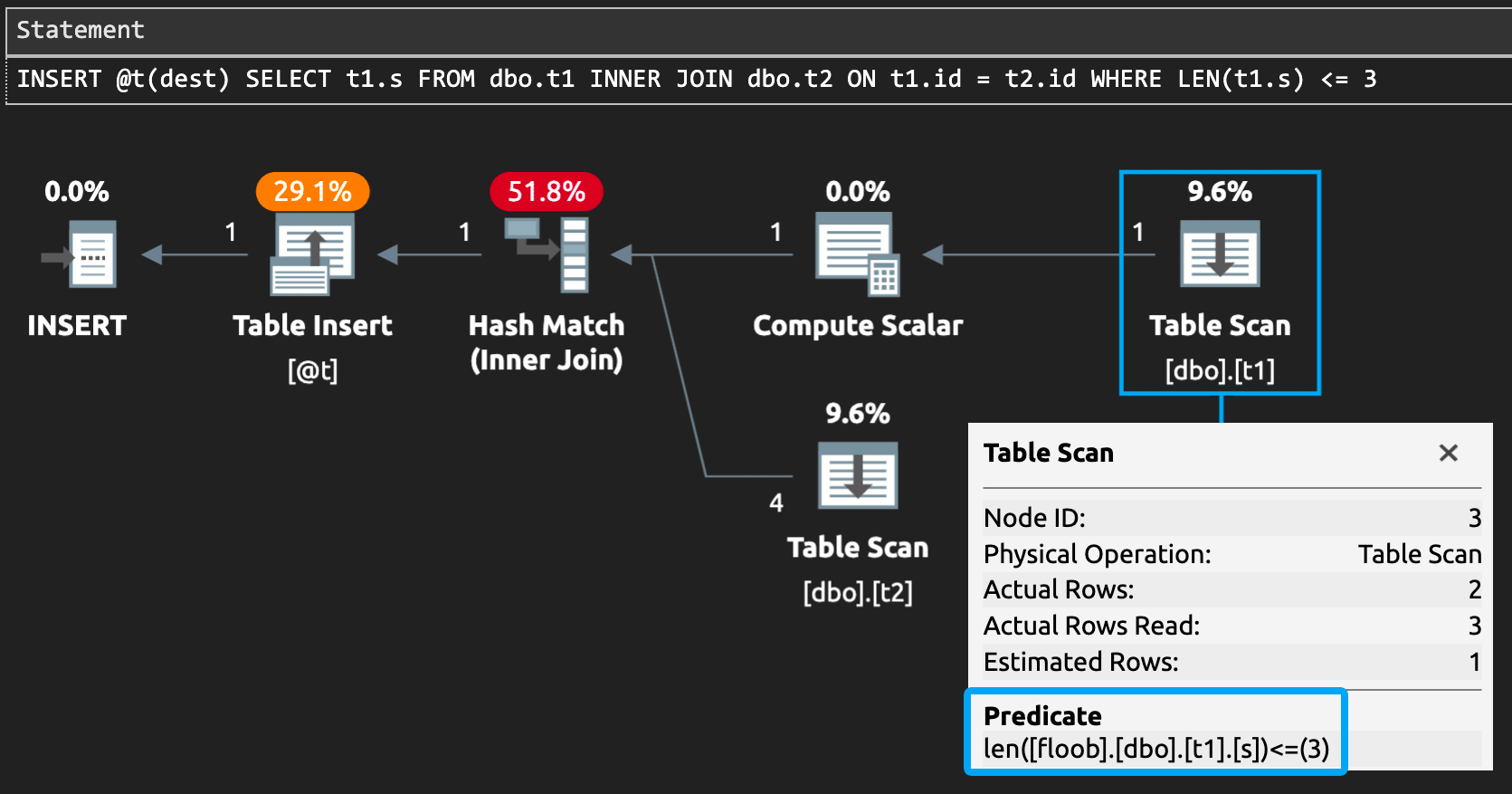

You could also apply a WHERE clause to eliminate the rows explicitly. This works in this specific case but, as demonstrated in the posts above, this won't always work (nor will using a CTE or derived table):

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int NOT NULL); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4), (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT t1.s FROM dbo.t1 INNER JOIN dbo.t2 ON t1.id = t2.id WHERE LEN(t1.s) <= 3; GO DROP TABLE dbo.t1, dbo.t2;

The plan is now:

No error with an explicit filter

No error with an explicit filter

In this case, the bad row is filtered out early enough that it becomes irrelevant which way the hash match operates.

You could also try to hint a loop join to avoid the hash match, and probably a half dozen other ways to change the query to either force earlier filtering or eliminate materialization.

Note that some hints may bring the error back, though. For example, in this case, OPTION (FORCE ORDER) (or any hint that preserves order) brings t1 back into the build side of the hash match, and the error returns:

CREATE TABLE dbo.t1(id int NOT NULL, s varchar(5) NOT NULL); CREATE TABLE dbo.t2(id int NOT NULL); INSERT dbo.t1 (id, s) VALUES (1, 'l=3'), (2, 'len=5'), (3, 'l=3'); INSERT dbo.t2 (id) VALUES (1), (3), (4), (5); GO DECLARE @t table(dest varchar(3) NOT NULL); INSERT @t(dest) SELECT t1.s FROM dbo.t1 INNER JOIN dbo.t2 ON t1.id = t2.id OPTION (FORCE ORDER); GO DROP TABLE dbo.t1, dbo.t2;

An ounce of prevention…

A more logical solution is to change the table variable to matching data types or, if longer data doesn't make sense in the source table, fix it at the source. This can have trickle-down effects but, generally, the problem stems from the source allowing values too large. Fix the source, and declarations too big elsewhere are unlikely to cause any issues unless they are populated from other sources.

In the meantime, make sure there isn't some errant data present that is explicitly causing the error, making you chase your tail. It wouldn't be the first time the code was blamed when bad data (or, rather, a schema too permissive) was actually at fault.

Conclusion

My point here isn't to tell you how to work around the error, it's more that you may or may not see the truncation error, even when it doesn't make sense. And that you may see the reverse happen — happily working code may suddenly break when someone adds data, updates statistics, creates or rebuilds an index, or a failover or service restart happens.

The schema and the underlying data can play a part in the plan that you get, and other factors may make you get different plans on different servers or even on the same server at different points in time, even when the schema and data are the same.

Huge shout out to Paul White, whose invaluable input made this post way better than it might have been otherwise.

1 Response

[…] Aaron Bertrand takes us through a problem with seemingly indeterminate query errors: […]