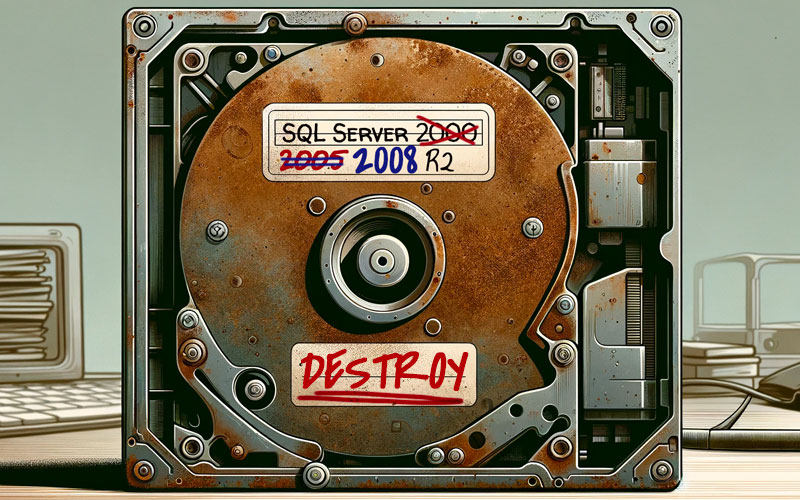

I used to blog about every update to every version of SQL Server – from CTP and RC releases to GDR and security updates to service packs and cumulative updates. Many of those blog posts are no longer relevant, and I've started doing some housekeeping on them.

I also used to keep painstaking lists of all the builds for all the major versions over at sqlperformance.com. Since the SolarWinds takeover, though, that site has fallen well below the bottom of their priority list, and I predict it will just wither away.

Some other places you can use instead:

- Latest updates and version history for SQL Server

- Microsoft SQL Server Versions List

- SQLServerUpdates.com