It is a common problem… you need to pass multiple values into a stored procedure and, as a result, update multiple rows. Let's pretend we have a table of students:

CREATE DATABASE School; GO USE School; GO CREATE TABLE dbo.Students ( StudentID INT PRIMARY KEY, FullName NVARCHAR(64), ModifiedDate SMALLDATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ); INSERT dbo.Students(StudentID, FullName) SELECT 1, 'Aaron Bertrand' UNION ALL SELECT 2, 'Kalen Delaney' UNION ALL SELECT 3, 'Adam Machanic' UNION ALL SELECT 4, 'Denis Gobo' UNION ALL SELECT 5, 'Louis Davidson';

Now we let the user click different checkboxes or select multiple items in a multi-select on a web form, and as a result we can pass a comma-separated list back to the database quite easily. (Imagine the procedure is doing other things to this set, but to keep it simple, we are just going to update the ModifiedDate column.)

But once you have the comma-separated list in the database, what do you do next? Most users' first attempt is something like this:

CREATE PROCEDURE dbo.UpdateStudents @List VARCHAR(MAX) AS BEGIN SET NOCOUNT ON; UPDATE dbo.Students SET ModifiedDate = CURRENT_TIMESTAMP WHERE StudentID IN (@List); END GO EXEC dbo.UpdateStudents @List = '1,3,5';

But because IN() is expecting the predicate data type to match the column (or to be a column name or independent variable), you get this error:

Conversion failed when converting the varchar value '1,3,5' to data type int.

Next, people will often try to use dynamic SQL, but then you will have to go to extra lengths to thwart SQL injection, and you won't know for sure if your values are all integers, or even if other invalid characters crept into your list:

ALTER PROCEDURE dbo.UpdateStudents @List VARCHAR(MAX) AS BEGIN SET NOCOUNT ON; DECLARE @sql NVARCHAR(MAX); SET @sql = 'UPDATE dbo.Students SET ModifiedDate = CURRENT_TIMESTAMP WHERE StudentID IN (' + @List + ');'; EXEC master.dbo.sp_executeSQL @sql; END GO EXEC dbo.UpdateStudents @List = '1,3,5,junk_foobar';

Since there is no column named junk_foobar, you get this error:

Invalid column name 'junk_foobar'.

Folks like –CELKO– will tell you that you should pass each value individually into independent parameters. His reasoning: you get data type validation "for free," and the only string splitting mechanisms he has been exposed to do not handle validation (though many do). The problem with Celko's solution is that it is neither scalable nor maintainable. Sure, it might be okay with only a handful maximum StudentIDs supported, as follows:

ALTER PROCEDURE dbo.UpdateStudents @Student1 INT, @Student2 INT = NULL, @Student3 INT = NULL, @Student4 INT = NULL, @Student5 INT = NULL, @Student6 INT = NULL, @Student7 INT = NULL, @Student8 INT = NULL, @Student9 INT = NULL, @Student10 INT = NULL AS BEGIN SET NOCOUNT ON; UPDATE dbo.Students SET ModifiedDate = CURRENT_TIMESTAMP WHERE StudentID IN ( @Student1, @Student2, @Student3, @Student4, @Student5, @Student6, @Student7, @Student8, @Student9, @Student10 ); END GO EXEC dbo.UpdateStudents @Student1 = 1, @Student2 = 3, @Student3 = 5;

Even at 10 StudentIDs, that code is really ugly. But what if you want to be able to update 100, 500, or 5,000 at a time? Do you want me to post a code sample that shows a stored procedure with 500 of those parameters defined? You probably don't even want to see it, never mind manage it. The technical limit for the maximum number of parameters for a stored procedure in SQL Server 2005 and SQL Server 2008 is 2,100 (reference). The practical limit, meaning the most you would *want* to have, I can easily presume is much lower (at least among the sane). Who wants to maintain a stored procedure with 2,100 parameters? And what about the application code that now has to know that the maximum number of parameters is n, and then has to destruct the full list and make multiple calls to the stored procedure, one for each full or partial set of n parameters that has been broken out? Yes, validation is free in this case; but everything else about this solution is expensive. In any case, in spite of Celko's delusions of the contrary, you can split a set of comma-separated integers in a much more elegant fashion, and have validation as well. The rest of this post will describe the method I favor.

Populate a Numbers table

First things first; if you don't already have a numbers table in place, you should create one, even if you don't plan to implement anything else in this post. (This auxiliary table can be immensely useful; I've been writing about its virtues for years, and still have a hard time convincing people that one extra table is not the end of the world as we know it.) The code below will create a numbers table and populate it for you. On my system, one cross join yields 16,129 rows, and two cross joins yield 2,048,383 rows. If you need < 16,000 rows, then you can comment out the second CROSS JOIN statement. If you need more than 2 million rows in your numbers table, good luck — I added a 4th instance of sys.objects, and killed it after several minutes. I'm not sure if you'd want to go over the limit of the INT data type for something like this, but you know your requirements better than I do. For the situation we're talking about here, let's assume that the practical upper bound for the number of selected students is 10,000.

SET NOCOUNT ON; DECLARE @UpperLimit INT; SET @UpperLimit = 10000; WITH n AS ( SELECT rn = ROW_NUMBER() OVER (ORDER BY s1.[object_id]) FROM sys.objects AS s1 CROSS JOIN sys.objects AS s2 CROSS JOIN sys.objects AS s3 ) SELECT [Number] = rn - 1 INTO dbo.Numbers FROM n WHERE rn <= @UpperLimit + 1; CREATE UNIQUE CLUSTERED INDEX n ON dbo.Numbers([Number]);

Create a splitting function

There are many ways to skin this cat. Most involve cursors (or loops in disguise); this one doesn't. It uses a table-valued function and the numbers table to find each item in the list (and uses DISTINCT to avoid extra work from negligent duplicates).

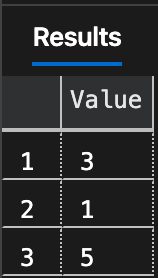

CREATE FUNCTION dbo.SplitInts ( @List VARCHAR(MAX) ) RETURNS TABLE AS RETURN ( SELECT DISTINCT [Value] = CONVERT(INT, LTRIM(RTRIM(CONVERT( VARCHAR(12), SUBSTRING(@List, Number, CHARINDEX(',', @List + ',', Number) - Number))))) FROM dbo.Numbers WHERE Number <= CONVERT(INT, LEN(@List)) AND SUBSTRING(',' + @List, Number, 1) = ',' ); GO SELECT [Value] FROM dbo.SplitInts('1,3,5');

The explicit CONVERT to INT gives us our "free" validation. If we pass in a non-integer value or other garbage, we get an error message:

SELECT [Value] FROM dbo.SplitInts('1,3,5,junk_foobar');

Conversion failed when converting the varchar value 'junk_foobar' to data type int.

Fix the procedure

Now that we have a function that will return a table of values that we KNOW are integers, the stored procedure is quite easy to code. I could have gone for a proprietary UPDATE FROM here, but I figured it would be best to use a more straigthforward IN() clause.

DROP PROCEDURE dbo.UpdateStudents GO CREATE PROCEDURE dbo.UpdateStudents @List VARCHAR(MAX) AS BEGIN SET NOCOUNT ON; UPDATE dbo.Students SET ModifiedDate = CURRENT_TIMESTAMP WHERE StudentID IN ( SELECT [Value] FROM dbo.SplitInts(@List) ); END GO EXEC dbo.UpdateStudents @List = '1,3,5';

We could wrap a TRY/CATCH around that UPDATE statement to give the user a more friendly message (something we can't do from inside the function, and which we wouldn't want to anyway, because the function can be used for other entities besides Students):

ALTER PROCEDURE dbo.UpdateStudents @List VARCHAR(MAX) AS BEGIN SET NOCOUNT ON; DECLARE @msg VARCHAR(MAX); BEGIN TRY UPDATE dbo.Students SET ModifiedDate = CURRENT_TIMESTAMP WHERE StudentID IN ( SELECT [Value] FROM dbo.SplitInts(@List) ); END TRY BEGIN CATCH SELECT @msg = ERROR_MESSAGE(), @msg = SUBSTRING ( @msg, CHARINDEX('varchar value ''', @msg) + 14, LEN(@msg) - (CHARINDEX('varchar value ''', @msg) + 14) - CHARINDEX('''', REVERSE(@msg)) + 2 ); RAISERROR('Value for StudentID %s was invalid.', 11, 1, @msg); END CATCH END GO EXEC dbo.UpdateStudents '1,3,junk_foobar';

Now when we run the faulty code, we get this error message:

Value for StudentID 'junk_foobar' was invalid.

If we want to simply ignore any garbage that is passed in, and only return values that are actually present in our numbers table, we can change the function like this:

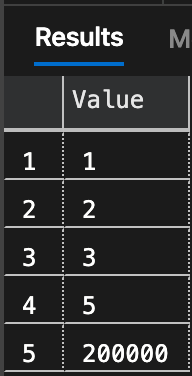

CREATE FUNCTION dbo.SplitInts ( @List VARCHAR(MAX) ) RETURNS TABLE AS RETURN ( SELECT DISTINCT [Value] = [Number] FROM dbo.Numbers WHERE CHARINDEX ( ',' + RTRIM([Number]) + ',', ',' + REPLACE(@List,' ','') + ',' ) > 0 ); GO SELECT [Value] FROM dbo.SplitInts('1,3,5,junk,200000,2');

If we want to support more than 10,000 items in @List, we don't have to touch the code, we just have to make sure that we add enough rows to the dbo.Numbers table.

Other options

You may find value in Tony Rogerson's solution, which has a couple of nice features, such as indicating which positional value failed validation. Erland Sommarskog also does a very thorough treatment of the topic in this article. If you want to process sets of delimited values (e.g. 'foo|bar,blat|splunge,goo|ber'), you can see my post on Google groups. Finally, if you are using SQL Server 2008 only, then I also suggest you take a look at Table-Valued Parameters.

Wow what a great post! I like it even more because it's not just a technique for processing a list of integers. You've got stuff in there about a numbers table, TRY CATCH and RAISERROR, all of which have sent me down different roads in terms of learning new stuff. I also like the consideration of code that is scalable and easy to maintain; something I find that is becoming more and more relevant to me the more code I write.

Thanks Aaron!

Aaron,

Gotta say it again… I know it's a relatively old blog but well done not only on the blog post, but on the way you're taking the heat. Hang in there. Like "Red Green" says, "We're all in this together and I'm pullin' for ya!"

–Jeff Moden

Heh… What a rock and a hard spot! 😉

When they first announced that CLR (I guess it's more appropriately called "SQLCLR", thanks Adam) was going to be available in 2k5, I started thinking of all the wonderful things that could be done with it to fill in the "holes" for SQL Server. I personally still think that it's a great tool when used properly. If you want to see some great proper usage, Adam's blog entries are just loaded with good ideas and code and I strongly recommend you give them a look.

On the other hand, I have to also say that Aaron is spot on. Right, wrong, or indiffernet, whether it's because of an "arbitrary" management requirement to not use them, an attempt to limit the number of languages being used in a shop (especially a "Java only" shop like Aaron pointed out), or a fair amount of FUD on the part of a DBA (or, maybe, a DBA who's just a bit lazy), if CLR can't be used because of edict, then you'd better know a "T-SQL Only" or at least an "SQL Server Only" (ie: no external code) solution to get the job done and it better be a good one.

For some of you that know me from SQLServerCentral.com, you probably think I'm "anti-CLR". Nothing could be further from the truth. What I am against is people using CLR's to make up for their (sometimes extreme) lack of knowledge about T-SQL and SQL Server like the story I heard from a DBA friend about a developer that wrote a CLR to do an "upsert/merge" because he couldn't figure out how to do it in T-SQL. I also have personal experience with one lad that wrote a CLR to do a "modulo" because he couldn't figure out how to do it in T-SQL.

Like Cursors, While Loops, and recursive CTE's, CLR's have a wonderful purpose in life… you can't ask for a better tool when it's appropriate. The problem is that a lot of people just don't understand when it's appropriate. And, like I said before, if they're simply not allowed, then you'd better know the T-SQL solution.

I certainly could be wrong, but I believe that's the point that Aaron's trying to make and I absolutely agree with that.

–Jeff Moden

🙂 Hey, I wouldn't call CLR a Porsche…

I'm curious – can you explain the reasoning behind not allowing CLR in your production servers? Security? Manageability?

Saggi, yes of course I understand that CLR can improve performance in some cases. Notice that I never said, "don't use CLR because it's slower or not suited for this task." My objection to CLR is solely because, as with a lot of readers, I do not have the luxury of just enabling and implementing CLR code in our production databases. So instead I need to solve problems using only T-SQL.

CLR is simply not something that everyone can use; I'd love to drive a Porsche or get my own private jet, but it's not always practical for everyone to do so. Even those who could make a strong argument for CLR could still be blocked by staunchy and entrenched policy.

In our environment we are always I/O-bound so saving 20% of the CPU in the rare cases where we're doing this kind of work, quite frankly, is not going to amount to squat. So while I might someday be able to say, "yeah, look how cool I am, I am using CLR!" it just doesn't have the justification to go back and re-visit this non-CLR code which is working fine and is not a bottleneck.

As always, YMMV.

Hey Aaron,

In terms of scalability and performance, the CLR approach is far superior. We've used a T-SQL split function for a long time in db with many transactions/sec and we managed to bring the cpu down in 20% by switching to the CLR approach. I hope we get to upgrade to 2008 soon to benchmark table-valued parameters in this database.

Cheers,

S. Neumann

Armando: Understood 100%. Been there, done that. Luckily, the companies that I've done work for recently have been quite flexible and I've been able to deploy some very cool (IMO, at least!) solutions using SQLCLR.

Gentlemen

I think we will have to agree to disagree on this. I think CLR is a great tool. But, as Aaron has stated and I have illustrated, it is just not an option due management mandate. It's nothing against the technology, it's that some shops (like mine) want code that can debugged quickly if the original developer were to leave. Now, in my case, I'm the only SQL person in the company so they want me just using SQL for all solutions since our devs can read SQL and can ALTER procedures if need be. I'm not saying it's right or wrong. I'm just saying that's just how it is some shops.

What I'm trying to make you realize is that sometimes when you're driving in suburban areas it's quite possible that you'll see a deer cross the road.

Are you suggesting that every person on earth who has the ability to drive must also have the ability to change a tire? Never mind the variable where they are simply prohibited from doing so. I'm sorry guys, but in 2009 you are simply not going to be able to convince every single shop to allow CLR.

Wait! What if you had puncture-resistant tires and your car was equipped with a hydrolic lift system and a robot to lift and install the new tire for you? And what if that robot was programmed using SQLCLR, and it malfunctioned while replacing the tire, and you happened to be in a remote part of New Guinea and couldn't get phone service to call AAA? What then???

Ok, I'll keep going with this analogy. What if the driver is alone and not strong enough to lift the spare? Or the manual is gone? This is the kind of roadblock that is in place when management forbids new languages, or spending time on new languages, or spending time learning how to compile code written in new languages to avoid paying otherwise unnecessary licensing fees, or simply from turning CLR on as a matter of principle.

CLR is still not for everybody, regardless of whether the individual developer would prefer to change their own tire.

I'm with Adam on the tire thing. A few weeks ago, I was so lucky to drive on a screw at the correct angle to get a flat. Because I was in a hurry to go somewhere, I decided to change it myself. Honestly, I had to read the manual to find where to put the jack. But I got it done in 30 minutes. I would never expect AAA to come around that fast. There is a lesson here for SQLCLR as well.

Nice post, thanx.

Hi Adam

Unfortunately, our technical management has dictated that we use Java and SQL only. Their reasoning? We had a developer code up some conversion routine and decided to use Python. He was the only one in the person who knew it. That developer left and then a critical bug popped up in the conversion. We had a couple of engineers scramble to figure out the code. So, now I'm mandated from using anything else.

I'm not trying to sell anything. I don't care whether people use SQLCLR, but the fact is that in some–not all–cases it's absolutely not true that they're doing "well enough" without it. Nor am I refusing to acknowledge roadblocks. To the contrary, I'm trying to help eliminate them by providing solid arguments that can be used to get that all-important buy-in when and if SQLCLR is appropriate.

And at the risk of beating this metaphor to death: Just because you can't be bothered changing your own flat doesn't mean that everyone wants to wait 90 minutes for a tow truck to show up. Some people might ENJOY getting their hands dirty from time to time.

Ok, well in that case I certainly prefer calling AAA than getting my hands dirty. After all, that's why I keep paying for my annual membership, even though it's been three or four years since I've had a flat.

Just because you don't need a VS license doesn't mean you are willing to spend resources learning a different language (regardless of how "easy enough" it might be for some, it is still a different approach than Java UI development), just to do something in SQL Server that you can already do well enough without it.

I'm sorry, but you're just not going to be able to convince the entire world to allow CLR, no matter how good a salesman you are. You are refusing to acknowledge all of the roadblocks that a <insert something other than C#> language shop is going to have, not the least of which is management – not developer – buy-in.

Aaron: These are not good reasons. VS is not required–the compilers are free to download, and you can even get a free IDE (Sharp Develop). C# and VB.NET can be learned easily enough, especially C# by Java developers; the languages are quite similar. It's not a question of "manual vs automatic"; it's closer to the question of opening the trunk and using the jack to put on a spare tire vs calling AAA and having the car towed to the dealership.

Adam, if there are no Visual Studio licenses in-house, and/or no C# or VB.Net expertise whatsoever, it makes it that much tougher to make a case for CLR. I'm a big fan of manual transmission, but I am never going to be able to convince all of my colleagues to switch from automatic.

Uri, both UDFs I posted above will support SQL Server 2000, with one limitation: you need to change VARCHAR(MAX) to VARCHAR(8000), and support a smaller potential list size. I imagine with a multi-statement function you could dump a TEXT variable into a table variable and parse that, but that doesn't sound much fun to me. I'd rather upgrade. 🙂

Uri, yes, this is what I use in production. I have a variation for strings that maintains the order in the original list, but that is a multi-statement function as opposed to the inline TVF above.

Armando: I don't understand why a "strict Java-only shop" can't use SQLCLR. Java-only can't possibly apply if you're using SQL Server; you're allowed to use T-SQL inside SQL Server, right? So why can't you use C# or VB.NET inside SQL Server? Shouldn't Java-only only apply to app code?

Hi Aaron

And for those who still use SQL Server 2000 the last UDF is a solution.

Is it also your approach Aaron?

I've used Erland's approach but I like yours a bit more, Aaron. As I read the article I thought "Damn, why didn't I think of that?". And I concur, my company is a strict Java-only shop so CLR based solutions are out.

Adam & Alex,

Yes, if you are going for absolute performance, then certainly CLR, padding and packing should be among your tests. For most bang for the buck and lowest common denominator, I shy away from these because:

(a) not all shops allow CLR.

(b) client application code (at least from web-based forms) builds comma-separated lists by default, so dealing with these means no change to the application(s) in a lot of cases.

(c) a lot of folks can't wrap their heads around the code that packs numbers into a blob.

Due to a recent thread in the newsgroups, I also wanted to show what I was actually using in production right now, and how just about *any* of these solutions is better than Celko's n parameters approach.

Aaron,

Well, yes, this is a very common topic. SOme time ago I needed to transfer up to 100K numbers from my client to my server many times a day. Did a lot of benchmarking on 2005. It was simpler and faster to deal with fixed width that with comma separated.For instance, instead of smallints '1,2,23,345' it was faster to parse five characters per number: ' 1 2 23 345'. It was even faster to pack numbers in binary form into an image aka VARBINARY(MAX). There were some gotchas, like you have to reverse order of bytes in binary representation, but it was much faster on the server and used less network packets. Erland was kind enough to include my code which packs numbers into a binary blob in his article.

Might as well toss in my own approach:

http://sqlblog.com/blogs/adam_machanic/archive/2009/04/28/sqlclr-string-splitting-part-2-even-faster-even-more-scalable.aspx

🙂

This is quite a common requirement – I'm sure a number of people will find this post useful. 🙂

There is some discussion about this in a previous SQLBlog post:

http://sqlblog.com/blogs/peter_debetta/archive/2007/02/12/multiple-valued-parameters.aspx

Erland Sommarskog article seems pretty comprehensive. Good stuff.